Remote and On-site Interactive Museum Experience System

Introduction

This project presents a prototype of a remote and on-site interactive museum experience system that lets remote and on-site visitors share their museum experiences in real time. The target audience is users who are unable to visit Fife but want a unique and engaging remote experience. Because museum visits are interactive and exhibits are often accompanied by meaningful stories, the system combines a primary software interaction module (based on p5.js), an auxiliary feedback module (based on React & Expo), and a hardware input module (micro:bit) that substitutes for a mouse and keyboard. The system is built around Fife's most important spring elements (cherry blossoms and colors) as exhibit themes, exploring interactions with objects and nature and creating virtual artistic experiences.

Concept

The museum and remote visitors are connected through a shared Firebase database. The purpose of the system is to convey the emotions and stories of the exhibits across distances. Beyond providing a basic remote experience for users who cannot visit the museum in person, the longer-term vision is to offer warmth and healing to people who cannot meet because of distance, cannot encounter one another because of time, or have forgotten the spring in their lives. Emotional interaction is therefore the primary design goal. The system mainly uses the p5.js drawing library to visualize physical entities such as museum lighting and buttons, while also receiving user input from the mouse, keyboard, and hardware devices and providing instant feedback. The web interface built on the Expo framework presents the museum's natural environment through images and text and, more importantly, sends user experience data to the database. The core of this data is the binding of users' mood scores to colors, followed by the collection of text about user stories. This data can help expand the museum's interactive system in the future and provide a more diverse range of resources for the database. The following parts discuss the system's stories, elements, visuals, functions, interactions, and future expansions for both software ends in detail.

System Design

User Story & Requirements

The remote museum provides an online visiting experience for users of different ages, cultural backgrounds, and interests. Because it is not restricted by time and space, the diversity of remote users increases. In general, the core beneficiaries are the elderly and people with mobility difficulties, followed by families with children, students and academics, and artists and enthusiasts. These groups share three main needs:

01

A need for more intuitive and simpler interactive devices; the interaction methods should be convenient and easy to understand.

02

A need for gradual guidance and assistance, as well as materials to stimulate their enthusiasm and inspiration.

03

A need for more emotional and sensory interaction.

For the primary beneficiary groups, micro:bit buttons will serve as the main input method, while mouse and keyboard input retain the same functionality. In addition, because users are complex and differ from one another, their individual needs should also be considered. The design will therefore stay open, exploratory, and fun while meeting the common requirements.


Literature review & design ideas

[Figure: "Telling stories with data" diagram with the labels Author-Driven, Reader-Driven, and The Martini Glass Structure]

As the digital resources in the museum's database consist only of text and numbers, constructing meaningful stories from abstract data is at the core of the design. "Telling stories with data" is also a central concept in Edward Segel and Jeffrey Heer's (2010) work on narrative visualization. They analyzed a range of narrative visualization examples, examined their strengths and weaknesses, and discussed how design dimensions can be combined to create engaging and effective visual stories. In mapping out this design space, they identified two central concepts: visual narrative strategies and narrative structural strategies. Visual narrative strategies are the visual elements that aid and facilitate the narrative, and they fall into three parts: visual structure, emphasis, and transition guidance. This design uses these three elements to visualize numbers and text and to organize the interaction logic and the arrangement of interactive elements and scenes.

Visual structure refers to the mechanisms that convey the overall narrative structure of the visualization to the audience and let them determine their position within its larger organization. These design strategies help the audience orient themselves early on (establishing shots, checklists, consistent visual platforms) and track their progress through the visualization (progress bars, timeline sliders). Transition guidance covers techniques for moving within or between visual scenes without disorienting the audience. Accordingly, the interactive software end (p5.js sketch) of this design establishes transitions between pages or scenes through connected clues and adds chapters to help users keep track of their exploration (see Figure 2 and Figure 4).

Emphasis is the visual mechanism that helps the audience focus their attention on specific elements in the display. It can be achieved through color, motion, framing, size, and audio, which make elements more prominent relative to their surroundings. Because the data comes from multiple input sources, it is dynamic and immediate. In the design of scene elements, animation (cherry blossom rain), size (flower blossoming), progressive colors (petals), random variation (colored lines), and triggered music are therefore used to visualize changes in data input more intuitively.

Narrative structural strategies, in turn, are the non-visual mechanisms that assist and facilitate the narrative (sequencing, interactivity, and information delivery). Sequencing refers to the way the audience is led through the visualization. Sometimes the path is dictated by the author (linear), sometimes there is no suggested path at all (random access), and at other times the user must choose a path among multiple options (user-directed). The advantages and disadvantages of the different sequencing methods are compared in Table 1.

To guide elderly and young users who may have difficulty using a mouse and keyboard while keeping the system exploratory, this design adopts the Martini Glass Structure to balance author-driven and reader-driven narratives. A Martini Glass visualization begins with an author-driven approach, using questions, observations, or written text to introduce the visualization; in this system, this takes the form of dialogue boxes and click interactions on the page (see Figure 2 & Figure 3). Sometimes no text is used, and the introduction relies instead on interesting default views or annotations, such as the explorable body color capture frames and button annotations in this system (see Figure 5). Once the author's intended narrative is complete, the visualization opens up to the reader-driven stage, where users can freely explore the data. The structure resembles a martini glass, with the stem representing the single-path author-driven narrative and the mouth representing the opening into reader-driven exploration.

In summary, this design uses narrative interactive pages to convey the emotional aspects behind the exhibition to users. Within this framework, we primarily use the color attributes of elements to create a virtual emotional experience. Prior work suggests that engaging auditory and visual perception, color perception, and color associations can enhance remote users' sense of spatial presence, social intimacy, engagement, and enjoyment during their visit (Pisoni, 2020). At the same time, lighting color, as one of the main visual devices in museums, can support bidirectional interaction between users and the museum and imbue that interaction with psychological significance. This helps people focus on their inner emotional world and convey feelings, care, and love in non-verbal form through emotional connection.

[Figure: system architecture diagram with the labels Software: React & Expo; Software: p5.js; Hardware: micro:bit; Firebase & database; Museum; Data Visualization; Aid User Experience; IoT]

Feature Design & Prototype Implementation

Overall System Architecture

The interaction system consists of two software components, one hardware component, one remote database, and one Internet of Things (IoT) device system. The architecture diagram illustrates the system's internal and external interactions and gives an overview of all the database groups used for writing and reading data.


Software - p5.js Sketch

01 Sakura Screen: Interactive Cherry Blossom Painting

The web page, designed as a p5.js sketch and based on narrative visualization, provides a continuous interactive web experience. The first scene features a customizable sketch-style background of a cherry blossom tree trunk. Users can use a mouse or micro:bit to generate cherry blossoms at custom or random positions, erase cherry blossoms (using an eraser function), and, following the chapter-style guidance, trigger a shower of 99 cherry blossoms. The page displays statistics on the cherry blossoms created by users and sends the user-generated cherry blossom count to the Firebase database. It also retrieves the cherry blossom count stored by the museum end, so that remote users and the museum can create and share cherry blossoms to spread love and beauty.

Figure 2) The Martini Glass Structure, which employs a chapter-based narrative strategy and written introductions to guide exploration

| Functions & UI elements

The buttons on the page have been aesthetically enhanced with icons, and activation of the eraser is confirmed by a change in color. While the eraser is active, clicking on a cherry blossom erases it. The slider sets the size of the cherry blossoms. The cherry blossom button (or Button A on the micro:bit) generates cherry blossoms at random locations within the rectangular area spanned by the branches, using the pixel opacity of the background image to locate the branches. The cherry blossom rain button on the far right (or Button B) represents the final chapter of the guided experience. It triggers the falling and gradual enlargement of 99 cherry blossoms while playing cherry blossom-themed music. The page as a whole aims to be visually and audibly captivating, showcasing a beautiful and soothing cherry blossom tree.
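The random placement on the branches can be sketched as rejection sampling over the background image's alpha channel. This is a minimal illustration, not the project's actual code: the function name pickBranchPosition, the alpha threshold of 128, and the try budget are assumptions, and the pixel data is assumed to be a flat RGBA array like the one p5.js exposes after loadPixels().

```javascript
// Hypothetical helper: pick a random point inside `rect` whose background
// pixel is opaque enough to count as "branch".
// `pixels` is assumed to be a flat RGBA array (4 entries per pixel).
function pickBranchPosition(pixels, imgWidth, rect, options = {}) {
  // alphaThreshold and maxTries are illustrative defaults, not source values.
  const { alphaThreshold = 128, maxTries = 500, random = Math.random } = options;
  for (let attempt = 0; attempt < maxTries; attempt++) {
    const x = rect.x + Math.floor(random() * rect.w);
    const y = rect.y + Math.floor(random() * rect.h);
    // The alpha value is the 4th channel of the pixel at (x, y).
    const alpha = pixels[4 * (y * imgWidth + x) + 3];
    if (alpha >= alphaThreshold) {
      return { x, y };
    }
  }
  return null; // no branch pixel found within the try budget
}
```

Passing the random source as an option keeps the function testable; in a sketch it would simply fall back to Math.random, and a null result can be handled by skipping that blossom.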

Users interact with the museum end by generating cherry blossoms, which are written to the database on button clicks. In the other direction, the museum's button sends different numbers depending on how hard it is pressed, which serves as the IoT channel for cherry blossom generation on the museum end. The interactive system opens the exhibition with Fife's iconic cherry blossom tree scenery. Dialog boxes guide users to the next page, and direct links at the bottom of the page let users explore freely according to their preferences.

Figure 3) The Martini Glass Structure with dialogue boxes and interactive clicks

| Transition Scene: Cherry Blossom Clues Falling

Figure 4) Transition of Cherry Blossom Clues

As the transition links are placed at the bottom of the page, the transition scene will feature cherry blossoms gradually floating up from the bottom with animated effects of rotation and enlargement. At this point, a dialog box will appear, guiding the user to the next exhibition, and a request to open the camera will be made. When the user clicks on the dialog box to start the experience, the cherry blossoms will gradually fade and disappear from the page, leading to the transition to the next page. This scene uses cherry blossoms as continuous visual clues, showcasing a coherent narrative structure and spatial experience.

02 Physical Color Screen: Monet's Abstract Painting of the Body

The current pixel color is captured from the camera and converted to HSV so that its hue, saturation, and value can be analyzed. These three values define the widths of three randomly generated lines. In this way, the sketch generates colors that correspond to the color of the user's clothing, creating a personalized three-color card that represents each user's physical attributes.
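As a rough sketch of this step, the conversion below uses the standard RGB-to-HSV formula and then scales hue, saturation, and value into three line widths. The function names and the width range of 1 to 30 pixels are assumptions made for illustration; the source does not state how the values are scaled.

```javascript
// Convert 8-bit RGB to HSV: h in degrees [0, 360), s and v in [0, 1].
function rgbToHsv(r, g, b) {
  const rn = r / 255, gn = g / 255, bn = b / 255;
  const max = Math.max(rn, gn, bn);
  const min = Math.min(rn, gn, bn);
  const delta = max - min;
  let h = 0; // hue is undefined for grays; 0 is used by convention
  if (delta !== 0) {
    if (max === rn) h = 60 * (((gn - bn) / delta) % 6);
    else if (max === gn) h = 60 * ((bn - rn) / delta + 2);
    else h = 60 * ((rn - gn) / delta + 4);
  }
  if (h < 0) h += 360;
  const s = max === 0 ? 0 : delta / max;
  return { h, s, v: max };
}

// Hypothetical mapping: one line width per HSV component.
// minWidth and maxWidth are illustrative values, not taken from the project.
function lineWidths(hsv, minWidth = 1, maxWidth = 30) {
  const span = maxWidth - minWidth;
  return [
    minWidth + (hsv.h / 360) * span,
    minWidth + hsv.s * span,
    minWidth + hsv.v * span,
  ];
}
```

For example, lineWidths(rgbToHsv(255, 0, 0)) gives the thinnest line for hue and the thickest lines for saturation and value, since pure red has hue 0 with full saturation and value.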

Figure 5) Guided Operations with Annotations and Prompts

| Changes in Button State

| Artwork can be downloaded by pressing Button B on the micro:bit or “S” on the keyboard.

In terms of interactivity with users, different buttons or keys can be used to erase or download the generated abstract impressionist-style paintings. The element of fun in this interaction will benefit various groups. It can be seen as a family game where participants search for colors around them to create artwork. It can also serve as a means of artistic expression, offering new creative possibilities for art enthusiasts. Furthermore, for the elderly and individuals with limited mobility, this interactive device requires minimal physical effort. They can simply stand in front of it and still create artistic and meaningful paintings.

Regarding the interaction with the museum, the physical colors received from users can be transmitted to a light bulb through a button press, serving as a registration of their visit. This value will be stored in a database and retrieved on the next page. Once we have obtained the user's physical color, it needs to be complemented with the user's psychological color, representing their current mood. This aspect will be further elaborated in the Expo section. Clicking on "Send Your Physical Color" will open the next interface.

03 Mental Color Screen: Emotional Flowers

The page features two buttons that bloom randomly generated flowers representing the body color and the emotional color. The color information for the petals is retrieved from the database, specifically the three most recent arrays within the corresponding group ID. The elements of each column are combined to form the starting color, while the color with the lowest saturation at the same hue is set as the endpoint, and the color space between them is divided gradually. A central flower bud is then generated in the starting color, and the petals gradually bloom outward from it. The two buttons can also be replaced by Button A and Button B on the micro:bit. When the number of generated flowers reaches the threshold of 200, the final poem of the performance is activated: all the flowers instantly blur at the edges, and a dialog box indicating the end appears on the page.
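The petal gradient can be illustrated with a small sketch. It assumes the starting color is held as an HSV object and interprets "lowest saturation at the same hue" as saturation 0; the function names and the linear interpolation are assumptions, while the threshold of 200 flowers comes from the description above.

```javascript
// Threshold from the text: the final poem appears at 200 flowers.
const FINALE_THRESHOLD = 200;

// Hypothetical helper: colors from the starting color toward the
// least-saturated color of the same hue (assumed here to be s = 0).
function petalGradient(start, steps) {
  const colors = [];
  for (let i = 0; i < steps; i++) {
    // t runs from 0 (bud, starting color) to 1 (outermost petals).
    const t = steps === 1 ? 0 : i / (steps - 1);
    colors.push({ h: start.h, s: start.s * (1 - t), v: start.v });
  }
  return colors;
}

// Hypothetical helper: true once enough flowers have bloomed.
function finaleReached(flowerCount) {
  return flowerCount >= FINALE_THRESHOLD;
}
```

Keeping hue and value fixed while only saturation falls means every flower stays recognizably in the user's color while fading toward its edge.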

Figure 6) In sequence: flowers representing body color, flowers representing emotional color, flowers representing emotional color after reaching the threshold, and the accompanying poem.


Software - React & Expo

The Expo app primarily functions as feedback software and consists of six interfaces: the main interface, environment display interface, message interface, storyboard interface, mood card interface, and color selection interface. The latter four serve as the main feedback and input components, and the last two are linked and used together with the p5.js sketch for selecting emotional colors. Although the values from these input components are not yet effectively visualized, they matter for the system's future development, as discussed under Future Work/Extensions.

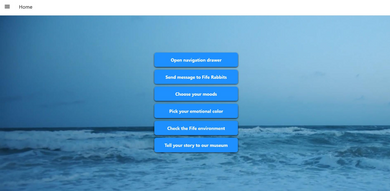

Home Screen: Drawer Navigation and Fife's Ocean Video Background

This page mainly imports and uses UI libraries for buttons and themes. In addition, a wave video background has been added, which is as iconic of Fife as the cherry blossoms. Clicking a button on the main page changes its opacity and navigates to the corresponding page. Opening the drawer menu likewise lets users select a navigation target.

Message Screen: Leave a Message for Fife's Little Bunny

On this page, users can leave messages for the little bunny. The system captures the ten most recent messages and displays them as a public message board. The buttons and input fields have also been optimized for usability.
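Selecting the ten most recent messages could look like the sketch below. The message shape (text and sentAt fields) and the function name are hypothetical; the source only states that the ten most recent messages are shown.

```javascript
// Hypothetical helper: return the `limit` newest messages, newest first.
// Each message is assumed to look like { text: string, sentAt: number },
// where sentAt is a millisecond timestamp.
function latestMessages(messages, limit = 10) {
  return [...messages] // copy so the caller's array is not reordered
    .sort((a, b) => b.sentAt - a.sentAt)
    .slice(0, limit);
}
```

Sorting a copy keeps the original list untouched, which is convenient when the same array also backs other parts of the interface.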

Weather Screen: Check the Museum's Environment

This screen displays the indoor and outdoor temperature and humidity as cards. The cards incorporate icons and are styled with rounded corners and shadows. Below the cards is an image of a green grass field; when the bunny is in contact, it appears on the screen.

Story Board: Send Your Story Form to the Library

This interface collects user stories for the backend. The form has been through a series of design and usability optimizations. The submitted text is sent to the specified group.

Moods Card: Choose Your Emotional State and Feelings

This is a grid of nine cards based on the nine-point emotion scale. Each card corresponds to an emotion and is initially assigned a specific color. Clicking on a card sends a numerical value (1-9) to the database to mark that emotion, and the corresponding emoji icon and animation are displayed on the page. Clicking the "Select Color" button navigates to the color selection page, where the user can choose their psychological color.

Color Select: Choose a Color for Your Selected Emotion Card

Dragging the bar selects different color attributes. The resulting color attributes are sent to the database and received by the p5.js end, where they are ultimately visualized on the Mental Color Screen page.

Evaluation

Software - p5.js Sketch

The p5.js interface implements an interactive web page with a complete loop. It retains exploratory elements and adaptability for multiple users while guiding user interactions. One limitation is that input currently relies heavily on the mouse and keyboard. To improve the experience for older adults, children, and people who cannot easily use a mouse or keyboard, more work is needed on exploring the micro:bit's hardware capabilities and sensors. In addition, while the text-based clues are clear and detailed, they are difficult for people who are illiterate or visually impaired, which highlights the need for sensory interactions beyond visual cues.

The interactive exhibition visualizes abstract colors, offering a new visual approach to user profiling in museums. However, color selection may be challenging for people with color recognition difficulties, so mapping colors to other sensory modalities should be considered. The association between colors and emotions is a good starting point, but different people may experience colors differently; synesthetes, for example, may perceive colors in a more fantastical way. Mapping color visualization onto richer forms of objects is a promising direction for future expansion.

The system is not limited to single-channel interaction and supports bidirectional interaction between software and hardware components. However, data capture may be disturbed or crowded out by inputs from other software components. To address this, each remote user entering the museum needs a unique account so that input visualizations and interactions are reflected accurately and promptly.

Software - Expo Sketch

The Expo app implements a complete interface, but due to time constraints the focus was mainly on the interaction functionality of a single software component, so several areas can still be improved. The primary functionality of mood selection and cards is well developed. The program sends both the card's scale value and the color attribute values to the database, but one limitation is that these two sets of attributes are not packaged and sent together, which can lead to matching errors.

Analyzing user experiences and mining data related to colors and emotions is an interesting and thought-provoking area. For example, while users are prompted to associate colors with emotions, it is unknown whether their actual feelings align with the cards. It may therefore be worth adding input fields or keywords to the cards to collect users' textual feedback on their emotions. Moreover, emotions are often complex and multifaceted, so two cards could be combined, like puzzle pieces, to represent more diverse emotional combinations.

The museum's feedback system may also need additional features such as displaying attractions and voting on, filtering, and responding to messages. For user text input, some visual prototypes have been explored (see appendix), but because the database places few restrictions on input, invalid text may be received, which makes the visualizations less effective. Furthermore, the environment page only presents temperature and humidity as numbers. D3 visualizations could be used to depict differences and changes in temperature and humidity. Alternatively, these factors could be visualized directly on the interactive pages: for example, temperature and humidity could affect the color and blooming of the cherry blossoms, the background and appearance of the rabbit, or the rising and falling tide of the ocean background on the Expo home page.
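One way to avoid the matching errors noted above is to package the mood score and the color into a single record before sending it. The sketch below is an assumption about how that could look, not the project's implementation: the function name, the field names, and the HSV color shape are all hypothetical, and the actual database write is left out.

```javascript
// Hypothetical helper: bundle a mood score (1-9) and its chosen color
// into one record so both values can be written in a single database write.
function buildMoodRecord(moodScore, color, now = Date.now()) {
  if (!Number.isInteger(moodScore) || moodScore < 1 || moodScore > 9) {
    throw new RangeError("moodScore must be an integer from 1 to 9");
  }
  const { h, s, v } = color; // assumed HSV shape: { h, s, v }
  if (![h, s, v].every(Number.isFinite)) {
    throw new TypeError("color must have numeric h, s and v fields");
  }
  // Copy the color so later changes to the picker state do not leak in.
  return { moodScore, color: { h, s, v }, createdAt: now };
}
```

Because the score and color travel in the same object, the reading side never has to pair two separately timed values, and the validation rejects incomplete input before it reaches the database.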

Hardware - micro:bit

As discussed in the p5.js section, it is worth considering the use of more of the micro:bit's sensors. We explored using the X, Y, and Z values of the accelerometer to control changes in two- or three-dimensional color properties. However, simple user testing and feedback showed that the sensitivity was too low and that users found it difficult to select the desired colors accurately, especially elderly users with limited dexterity. This ran counter to users' needs. Selecting colors by dragging along a bar is therefore a more suitable input method, as it is both accurate and simple.

Future Work/Extensions

1. User Profile Color Blocks: Body Color Card + Emotional Color

One prominent characteristic of remote users is their anonymity and sense of blurred roles. This is beneficial in eliminating divisions and biases based on identity, race, and gender, and it also makes it easier for individuals to express themselves and engage in emotional expression and interaction (Clarke & Costall, 2008; Beale & Creed, 2009). However, it also poses challenges in identifying user profiles and recognizing emotions and cultural factors related to the exhibition. One effective approach is to use color blocks to represent users, where the outer layer represents objective identity characteristics, while the core represents the soul and emotions through colors. This method, combined with the analysis of emotional data, can represent internalized user characteristics.

2. Data Mining and Visualization of Emotional Types (Attributes) and Color Combinations, Text Binding

The exploration of data mining and visualization involves understanding the emotional types (attributes) and color combinations, as well as binding them with textual information. By analyzing and visualizing this data, we can gain insights into the relationships between emotions and colors (Hemphill, 1996; Liu & Luo, 2016; Thorstenson et al., 2018), enhancing the understanding of users' emotional experiences.

3. Multisensory Color Synesthesia, Multiform Color Representation and Interaction

Exploring multisensory color synesthesia involves leveraging multiple senses (Suk & Irtel, 2010; Arroyo et al., 2011) to create associations and experiences related to colors. Additionally, considering multiple objects as color projections allows for a richer color representation (Nijdam, 2009). By expanding the forms of color representation and interaction, we can provide diverse and engaging experiences for users.

4. Enhancing Input Functionality on the Hardware End and Enabling Hardware-to-Hardware Interaction

Improving the input functionality on the hardware end is essential to cater to different user needs and capabilities. Additionally, enabling interaction between different hardware components opens up possibilities for collaborative and immersive experiences (Kortbek & Grønbæk, 2008).

References

Arroyo, E., Righi, V., Tarrago, R., & Blat, J. (2011). A remote multi-touch experience to support collaboration between remote museum visitors. In Human-Computer Interaction-INTERACT 2011: 13th IFIP TC 13 International Conference, Lisbon, Portugal, September 5-9, 2011, Proceedings, Part IV 13 (pp. 462-465). Springer Berlin Heidelberg.

Beale, R., & Creed, C. (2009). Affective interaction: How emotional agents affect users. International journal of human-computer studies, 67(9), 755-776.

Clarke, T., & Costall, A. (2008). The emotional connotations of color: A qualitative investigation. Color Research & Application, 33(5), 406-410.

Nijdam, N. A. (2009). Mapping emotion to color. Book Mapping emotion to color, 2-9.

Kortbek, K. J., & Grønbæk, K. (2008, October). Communicating art through interactive technology: new approaches for interaction design in art museums. In Proceedings of the 5th Nordic conference on Human-computer interaction: building bridges (pp. 229-238).

Pisoni, G. (2020). Mediating distance: New interfaces and interaction design techniques to follow and take part in remote museum visits. Journal of Systems and Information Technology, 22(4), 329-350.